A Thorough Guide to Deploying LiveKit on AWS EKS

August 1, 2023

by Elen Muradyan

Poor documentation and ambiguous examples can really slow down development, and nobody wants that. This article aims to give you a smoother experience and to fill in the gaps in the documentation that I unfortunately ran into myself.

Why LiveKit and EKS?

LiveKit is an open-source platform providing a modern, end-to-end WebRTC stack to build live video and audio applications. It can handle large-scale deployments and provides built-in load balancing and automatic scaling capabilities.

LiveKit can be deployed on a virtual machine. But what happens when the number of users increases significantly? You will have to handle it yourself, for example by provisioning more instances to distribute the workload and attaching a load balancer to them. You will also have to handle system updates and security patches on your own. Even then, the capacity of your VM host may not be enough when you need to scale up, and scaling up video calls is one such case.

This is where deployment on Kubernetes comes in handy. You will not need to scale anything manually; your application will accommodate the growing number of users, and the performance will not be affected. Additionally, Kubernetes optimizes resource utilization by distributing containers across available nodes. It also provides load-balancing mechanisms and easy deployment.

There are several Kubernetes services offered by various providers, and in this article, we will focus on the Elastic Kubernetes Service (EKS) provided by AWS. EKS integrates with a range of AWS services, including Secrets Manager and ElastiCache, which enable secure storage of secrets and distributed deployments. With AWS managing the operational complexities, deploying LiveKit on AWS EKS becomes a straightforward and secure process.

So, let’s proceed with deploying LiveKit to AWS Elastic Kubernetes Service together!

Setup

First of all, to ensure a smooth deployment process, you will need some tools. Since I am on a Windows machine, I used Chocolatey; you should use a package manager suitable for your OS.

choco install -y eksctl

choco install kubernetes-helm

helm repo add livekit https://helm.livekit.io

helm repo add eks https://aws.github.io/eks-charts

choco install -y awscli

npm i -g aws-cdk
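Before going further, it can save time to confirm that every tool is actually on your PATH. The following is a minimal sketch for a POSIX shell (Bash, Git Bash, or WSL); the helper name missing_tools is hypothetical, and the tool list assumes you also have kubectl installed, since it is used later in this guide.

```shell
#!/bin/sh
# Print the name of every tool from the argument list that is not on PATH.
missing_tools() {
  for tool in "$@"; do
    # command -v exits non-zero when the tool cannot be found
    if ! command -v "$tool" >/dev/null 2>&1; then
      printf '%s\n' "$tool"
    fi
  done
}

# Tools this guide relies on; kubectl is assumed to be installed as well.
required="eksctl helm aws cdk kubectl"

# $required is intentionally unquoted so it splits into separate names.
missing=$(missing_tools $required)
if [ -n "$missing" ]; then
  echo "Missing tools:" $missing
else
  echo "All required tools are installed."
fi
```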

You will also need AWS CDK Bootstrap. It provisions resources for the AWS CDK before deploying your apps into an AWS environment. You can find more details here. Run the following command to bootstrap.

cdk bootstrap aws://ACCOUNT-NUMBER/REGION

Creating a Cluster

Once you’re all set, you can finally create your Kubernetes cluster. There are two ways to do that: manually, or (preferably) with eksctl. Not only does eksctl create the cluster, but it also creates a dedicated VPC beforehand and attaches a managed node group to the cluster afterward. Run the following command.

eksctl create cluster --name my-cluster --region region-code

The command has more options that can be found in the official documentation.
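If you end up needing several of those options, eksctl can also read them from a config file. The sketch below writes a small ClusterConfig and shows how it would be used. The helper name write_cluster_config is hypothetical, and the node group name, instance type, and node count are assumptions for illustration, not values from this setup.

```shell
#!/bin/sh
# Print a minimal eksctl ClusterConfig for the given cluster name and region.
# The node group name, instance type, and size are illustrative assumptions.
write_cluster_config() {
  cluster_name=$1
  region=$2
  cat <<EOF
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: ${cluster_name}
  region: ${region}
managedNodeGroups:
  - name: livekit-nodes
    instanceType: c5.large
    desiredCapacity: 2
EOF
}

write_cluster_config my-cluster region-code > cluster.yaml
# Then create the cluster from the file instead of passing flags:
#   eksctl create cluster -f cluster.yaml
```

Keeping the file in version control makes it easier to recreate the same cluster later.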

Creating a Load Balancer Controller

The next step is setting up a load balancer controller. But before that, you should create an IAM OIDC provider for your cluster. IAM OIDC identity providers are entities in IAM that describe an external identity provider (IdP) service that supports the OpenID Connect (OIDC) standard, such as Google or Salesforce. To create it, run the following command.

eksctl utils associate-iam-oidc-provider --cluster my-cluster --approve

Now that the provider is set, we can create the Load Balancer Controller. AWS Load Balancer Controller helps to manage Elastic Load Balancers for a Kubernetes cluster. It provisions Application and Network Load Balancers to satisfy Ingress and Service resources in Kubernetes.
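If you want to double-check that the OIDC provider really was associated, one option is to compare the cluster's issuer ID with the providers registered in IAM. This is a rough sketch that assumes the AWS CLI is configured for the cluster's region; the function name oidc_provider_registered is hypothetical.

```shell
#!/bin/sh
# Succeeds when the cluster's OIDC issuer is registered as an IAM OIDC provider.
oidc_provider_registered() {
  cluster=$1
  # The issuer URL ends with /id/<ID>; that ID also appears in the provider ARN.
  issuer=$(aws eks describe-cluster --name "$cluster" \
    --query "cluster.identity.oidc.issuer" --output text) || return 1
  oidc_id=${issuer##*/}
  # An empty ID would match every line, so treat it as a failure.
  [ -n "$oidc_id" ] || return 1
  aws iam list-open-id-connect-providers --output text | grep -q "$oidc_id"
}

# Usage (requires configured AWS credentials):
#   oidc_provider_registered my-cluster && echo "OIDC provider is associated"
```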

The controller also needs a Kubernetes service account bound to an IAM role. This ensures your LB Controller is authorized to interact with AWS resources, specifically load balancers, on your behalf.

Download the policy file with one of the following commands depending on your OS.

curl -O https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.4.7/docs/install/iam_policy.json

Invoke-WebRequest -Uri "https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.4.7/docs/install/iam_policy.json" -OutFile "C:\PathToFile\iam_policy.json"

Use AWS CLI to create the policy with the downloaded JSON file.

aws iam create-policy \
  --policy-name AWSLoadBalancerControllerIAMPolicy \
  --policy-document file://iam_policy.json

Now, you need to attach it to a service account; once again, you can do this manually or with eksctl. I used the latter. The multi-line commands here use Bash line continuations; if you are using PowerShell, replace the backslashes with backticks.

eksctl create iamserviceaccount \
  --cluster=my-cluster \
  --namespace=kube-system \
  --name=aws-load-balancer-controller \
  --role-name AmazonEKSLoadBalancerControllerRole \
  --attach-policy-arn=arn:aws:iam::ACCOUNT-NUMBER:policy/AWSLoadBalancerControllerIAMPolicy \
  --approve

You can now deploy your Load Balancer Controller using a Helm chart. Helm is a convenient package manager for Kubernetes.

helm install aws-load-balancer-controller eks/aws-load-balancer-controller \
  -n kube-system \
  --set clusterName=my-cluster \
  --set serviceAccount.create=false \
  --set serviceAccount.name=aws-load-balancer-controller

Once you have the controller, what you need to do before deploying your application is take care of the necessary TLS certificates. First, register DNS records for your service and the TURN server so you can use them to create the required certificates.
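Before moving on to certificates, it can help to confirm that the controller installed with Helm is actually running. A small sketch, assuming kubectl already points at the new cluster; the wrapper name wait_for_lb_controller and the default timeout are my own choices.

```shell
#!/bin/sh
# Wait until the controller deployment reports its rollout as complete.
# The timeout argument is optional and defaults to 120 seconds.
wait_for_lb_controller() {
  timeout=${1:-120s}
  kubectl rollout status deployment/aws-load-balancer-controller \
    --namespace kube-system --timeout="$timeout"
}

# Usage:
#   wait_for_lb_controller 180s
```

If the rollout never completes, the controller's pod logs in the kube-system namespace are usually the first place to look, for example for IAM permission errors.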

Certificate Management

The TURN server needs a private certificate in the form of a Kubernetes secret, and the ALB will need a public certificate. Let’s discuss the private certificate in this step. You can either import your private TLS certificate as a Kubernetes secret or store it in Secrets Manager and access it from your cluster (eventually, it will still be a Kubernetes secret).

To import the certificate, run this command.

kubectl create secret tls <name> --cert <cert-file> --key <key-file> --namespace <namespace>

As I mentioned, another approach is to use Secrets Manager; this way, you keep most of your setup in the cloud and leverage AWS resources. For this, you need to install the Secrets Store CSI Driver and the AWS Secrets and Configuration Provider (ASCP). You then create a manifest for a pod that mounts a volume through the Secrets Store CSI Driver. When the pod starts, the kubelet invokes the CSI driver to mount the volume; the driver creates the volume and calls ASCP to fetch the secrets from AWS Secrets Manager.

Run the following Helm commands to install the Secrets Store CSI Driver and ASCP.

helm repo add secrets-store-csi-driver https://kubernetes-sigs.github.io/secrets-store-csi-driver/charts

helm install -n kube-system csi-secrets-store secrets-store-csi-driver/secrets-store-csi-driver --set syncSecret.enabled=true --set enableSecretRotation=true

helm repo add aws-secrets-manager https://aws.github.io/secrets-store-csi-driver-provider-aws

helm install -n kube-system secrets-provider-aws aws-secrets-manager/secrets-store-csi-driver-provider-aws

Create a service account to retrieve data from the AWS Secrets Manager securely. Create the following policy.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "secretsmanager:GetSecretValue",
        "secretsmanager:DescribeSecret"
      ],
      "Resource": [
        "SECRET_ARN"
      ]
    }
  ]
}

And attach it to your service account with eksctl.

eksctl create iamserviceaccount --name SERVICE_ACCOUNT --region="REGION" --cluster "CLUSTERNAME" --attach-policy-arn "POLICY_ARN" --approve --override-existing-serviceaccounts

Apart from the deployment manifest, you will also need a SecretProviderClass manifest to specify which secrets you want to mount. Ensure the OBJECT_NAME is the same as the name of the secret in AWS Secrets Manager. In this case, the private key and the certificate are stored in the same secret.

apiVersion: secrets-store.csi.x-k8s.io/v1
kind: SecretProviderClass
metadata:
  name: CLASS_NAME
  namespace: NAMESPACE
spec:
  provider: aws
  secretObjects:
    - secretName: SECRET_NAME
      type: kubernetes.io/tls
      data:
        #- objectName: <objectName> or <objectAlias>
        - objectName: SECRET_NAME
          key: tls.crt
        - objectName: SECRET_NAME
          key: tls.key
  parameters:
    objects: |
      - objectName: OBJECT_NAME
        objectType: secretsmanager

And finally, create the deployment file to mount secrets. The secretProviderClass should be the same as the name of the class in the previous manifest, and the serviceAccountName should be the name of the service account you have previously created.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: EXAMPLE_NAME
  namespace: NAMESPACE
spec:
  replicas: 1
  selector:
    matchLabels:
      app: EXAMPLE_NAME
  template:
    metadata:
      labels:
        app: EXAMPLE_NAME
    spec:
      serviceAccountName: SERVICE_ACCOUNT
      containers:
        - name: nginx
          image: nginx:1.14.2
          ports:
            - containerPort: 80
          volumeMounts:
            - name: VOLUME_NAME
              mountPath: /mnt/secrets
              readOnly: true
      volumes:
        - name: VOLUME_NAME
          csi:
            driver: secrets-store.csi.k8s.io
            readOnly: true
            volumeAttributes:
              secretProviderClass: CLASS_NAME

Now create the resources by running this command with the names of the manifest files.

kubectl apply -f secretproviderclassmanifest.yaml -f deploymentmanifest.yaml

Run this command to make sure your secrets have been successfully synced.

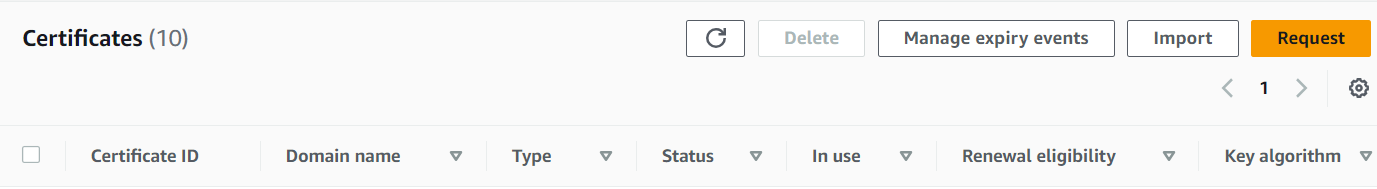

kubectl get secrets -n NAMESPACE

Create a public certificate in AWS for your ALB using AWS Certificate Manager; this step is relatively easy. Click on the Request button in the AWS console, specify the name of the DNS record, and you’re done.

Note: You might need to add a CNAME record to Route53 for your certificate to pass the validation and be issued.
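If you prefer the command line over the console, the same request can probably be made with the AWS CLI. The sketch below assumes DNS validation and that the certificate is requested in the same region as your ALB; the function names are hypothetical wrappers around the aws acm commands.

```shell
#!/bin/sh
# Request a DNS-validated public certificate and print its ARN.
request_alb_certificate() {
  domain=$1
  aws acm request-certificate \
    --domain-name "$domain" \
    --validation-method DNS \
    --query CertificateArn --output text
}

# Print the CNAME record that ACM expects you to create for validation.
show_validation_record() {
  cert_arn=$1
  aws acm describe-certificate --certificate-arn "$cert_arn" \
    --query "Certificate.DomainValidationOptions[0].ResourceRecord" \
    --output json
}

# Usage (DNS_RECORD is the record you registered for your service):
#   arn=$(request_alb_certificate DNS_RECORD)
#   show_validation_record "$arn"
```

The record printed by the second function is the CNAME mentioned in the note; it may take a few seconds after the request before ACM returns it.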

Deployment and Firewall

Now that you have your certificates, you can finally deploy your LiveKit service. For a distributed setup, you can use Redis, but you can omit that step for now. This is an example of a values.yaml file to use for your deployment. You can generate your API keys and tokens via this link. For the turn part, use this configuration and the name of the secret you have previously created.

turn:
  enabled: true
  domain: DNS_RECORD
  tls_port: 3478
  udp_port: 443
  secretName: SECRET_NAME
  serviceType: "LoadBalancer"

And for the load balancer, choose the type alb. Run the following command to deploy your application.

helm install <instance_name> livekit/livekit-server --namespace <namespace> --values values.yaml

Run this command to ensure your deployment pod is alive and well.

kubectl get pods -n NAMESPACE

In case you make changes to your values.yaml file, you can run these commands to apply them to your deployment.

helm repo update

helm upgrade <instance_name> livekit/livekit-server --namespace <namespace> --values values.yaml

After the deployment, the Load Balancer Controller, using the service account you created for it, will create an ALB and an NLB on your behalf. Set their DNS names as aliases in your Route53 records. Now that you’re all set, add the following inbound rules to the security group of your EKS nodes.

Congratulations, you have successfully deployed your LiveKit service on EKS!