n8n vs OpenAI Agent Builder

October 23, 2025

by Vahe Karufanyan

We built the same customer support automation workflow twice. First in n8n, as a full end-to-end inbox automation that is up and running. Then we tried to achieve the same result with OpenAI’s new Agent Builder.

- n8n is an open-source automation platform with a large catalog of integrations.

- Agent Builder is OpenAI’s agent platform. It offers an easy-to-use agent canvas with built-in tools that are mostly locked in to the OpenAI ecosystem.

Goal

Create a no-code automation workflow that triggers when a new customer support email arrives, generates an answer using the inbox history as a knowledge base, and saves it as a draft reply in the customer support inbox for the team to review, edit if necessary, and send.

Building both workflows

n8n

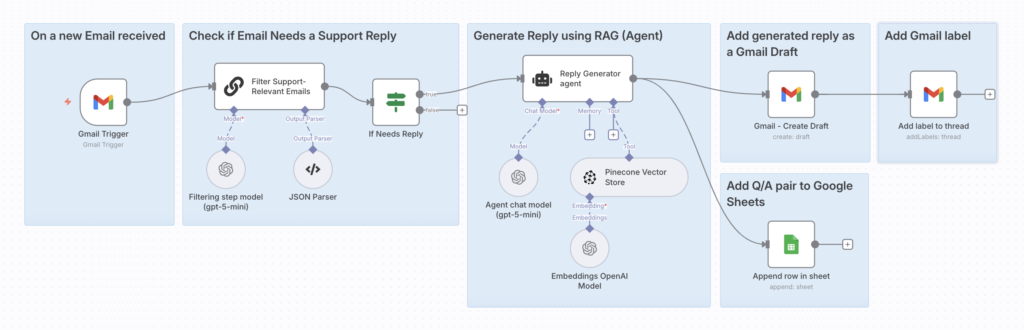

We started with n8n. As mentioned above, n8n is rich in integrations, both official ones made by the n8n team and community ones made by independent developers and companies, since the project is open source. n8n has a built-in Gmail integration with many trigger and action nodes. A trigger is the starting point of a workflow. We used the Gmail trigger, which checks the inbox for new messages at an interval you specify (every minute, every hour, etc.) and starts the workflow.

The next step is an LLM node that uses a lightweight model to let through only real customer support messages and filter out marketing emails and similar noise. After filtering, we pass the user’s message to an AI Agent node. The AI Agent consists of several parts: an LLM of your choice and a set of tools. Since the goal was to use older threads in the inbox as a knowledge base, we uploaded all of the previous question and answer pairs to a vector database. We chose Pinecone for its existing, easy-to-use n8n integration. To populate it, we created a separate n8n workflow that composes question and answer pairs from Gmail inbox threads, then uploads them to the Pinecone vector store using an existing Pinecone node (for the full breakdown of how we did it you can read this blog post). The agent uses this store as a tool: the tool receives the user’s message as input and returns the best matching answers for the agent to use when composing the draft answer.
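To make the retrieval step concrete, here is a minimal sketch of the idea in plain Python. It is not the Pinecone node or its API: the bag-of-words "embedding", the `QA_PAIRS` data, and the `retrieve` function are all made-up stand-ins, assuming only that the tool ranks stored questions by similarity to the incoming message and returns the top matches.

```python
import math
import re
from collections import Counter

# Hypothetical question/answer pairs; in the real workflow these are
# composed from Gmail threads and stored in a vector database.
QA_PAIRS = [
    ("How do I reset my password?", "Use the 'Forgot password' link on the login page."),
    ("Can I get a refund?", "Please reply with your order number and we will review it."),
    ("How do I change my billing email?", "Update it under Settings > Billing."),
]


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real setup would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, top_k: int = 2) -> list:
    """Return the top_k stored (question, answer) pairs most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(
        QA_PAIRS,
        key=lambda pair: cosine(query_vec, embed(pair[0])),
        reverse=True,
    )
    return ranked[:top_k]


if __name__ == "__main__":
    for question, answer in retrieve("I forgot my password, how can I reset it?"):
        print(question, "->", answer)
```

The agent would then receive these matches as context when composing the draft. A production vector store swaps the word counts for learned embeddings and the linear scan for an index, but the ranking idea is the same.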

The final step is adding the composed message to Gmail as a draft, and there is already an existing node for that. We also used a Gmail labeling node to label the drafts as agent-generated. With that, we have a working end-to-end automation for a customer support Gmail inbox in n8n.
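In n8n the draft node hides the details, but for readers curious about what creating a draft involves, here is a rough sketch of the request body the Gmail API's `users.drafts.create` method accepts: a `message` object whose `raw` field is a base64url-encoded RFC 2822 email. The function name `build_draft_body` and the example addresses are hypothetical, and the sketch stops short of authentication and the actual HTTP call.

```python
import base64
from email.message import EmailMessage
from typing import Optional


def build_draft_body(
    to_addr: str,
    subject: str,
    body_text: str,
    thread_id: Optional[str] = None,
) -> dict:
    """Build a request body in the shape expected by Gmail's drafts.create."""
    msg = EmailMessage()
    msg["To"] = to_addr
    msg["Subject"] = subject
    msg.set_content(body_text)

    # Gmail expects the full RFC 2822 message, base64url-encoded, in "raw".
    raw = base64.urlsafe_b64encode(msg.as_bytes()).decode("ascii")

    message = {"raw": raw}
    if thread_id:
        # Attaching the thread ID keeps the draft inside the customer's thread.
        message["threadId"] = thread_id
    return {"message": message}


if __name__ == "__main__":
    draft = build_draft_body(
        "customer@example.com",
        "Re: Login issue",
        "Hi, thanks for reaching out.",
        thread_id="t-123",
    )
    print(sorted(draft["message"].keys()))
```

Because the result is only a draft, nothing is sent until someone on the support team reviews it, which matches the human-in-the-loop goal stated earlier.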

OpenAI’s Agent Builder

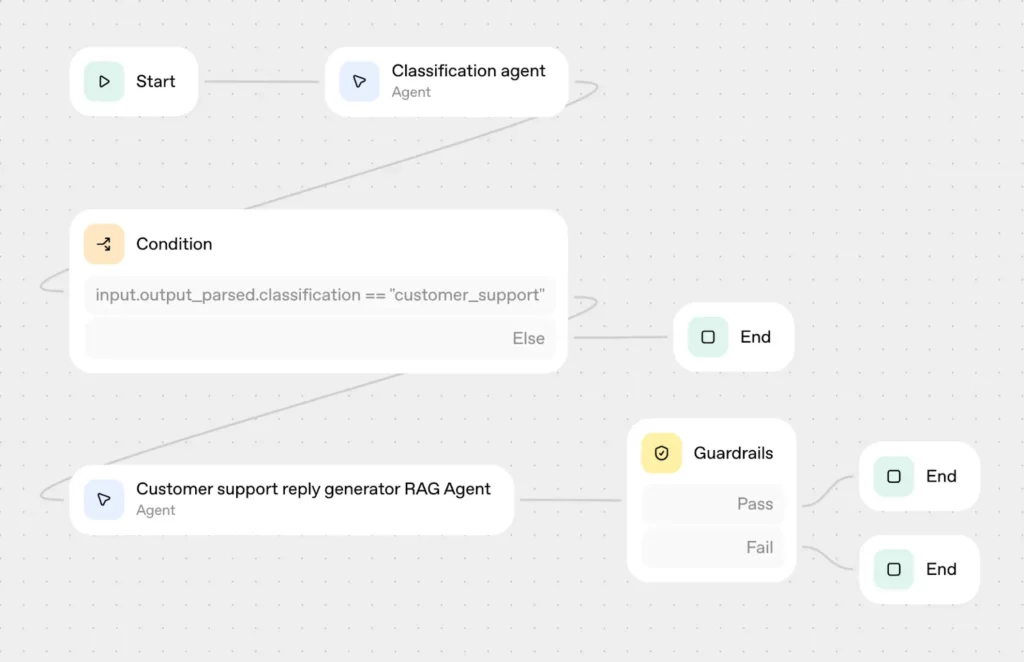

Now we’ll go through what we did using OpenAI’s Agent Builder. Unlike n8n, Agent Builder doesn’t have a ton of integrations, but it covers almost everything within OpenAI’s own ecosystem. From first impressions, I’d say it has a more user-friendly interface for non-technical users. Unfortunately, there is no direct Gmail integration, and we were not able to set up a trigger on newly received messages in the Agent Builder workflow itself. If you want that behavior, you’ll need to set up an external watcher that triggers the workflow whenever a new message arrives in your Gmail inbox.
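We did not build such a watcher, but its core logic is small. The sketch below shows one polling pass that skips messages it has already handled. Both `fetch_messages` and `trigger_workflow` are hypothetical callables you would have to supply yourself (for example, a Gmail API query and a call into your deployed agent); nothing here is an Agent Builder or Gmail API.

```python
from typing import Callable, Iterable, Set


def poll_once(
    fetch_messages: Callable[[], Iterable[dict]],
    trigger_workflow: Callable[[dict], None],
    seen_ids: Set[str],
) -> int:
    """Run one polling pass; trigger the workflow for each unseen message.

    Returns the number of messages that triggered the workflow.
    """
    triggered = 0
    for message in fetch_messages():
        if message["id"] in seen_ids:
            continue  # already processed in an earlier pass
        trigger_workflow(message)
        # Mark as seen only after the trigger succeeded, so a failure is retried.
        seen_ids.add(message["id"])
        triggered += 1
    return triggered


if __name__ == "__main__":
    # Stand-in inbox and trigger, for illustration only.
    inbox = [{"id": "m1", "text": "Help with login"}, {"id": "m2", "text": "Refund?"}]
    handled = []
    seen: Set[str] = set()

    print(poll_once(lambda: inbox, handled.append, seen))  # first pass
    print(poll_once(lambda: inbox, handled.append, seen))  # second pass, nothing new
```

A real watcher would call `poll_once` in a loop with a sleep between passes and persist `seen_ids` somewhere durable. This is roughly the work the Gmail trigger node does for you in n8n.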

Here as well, after the input, we added a filtering step using an agent node. This is very similar to what we did in n8n, but it’s worth noting that here you can only choose OpenAI models. In n8n you have a wide variety of choices, and it is even possible to set up a backup model in case the main one is down.
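The backup-model idea is simple to picture in code. This is a generic sketch of the pattern, not how n8n implements it: `complete_with_fallback` and the model callables are hypothetical, and each callable stands in for a request to a different provider.

```python
from typing import Callable, Optional, Sequence


def complete_with_fallback(prompt: str, models: Sequence[Callable[[str], str]]) -> str:
    """Try each model in order and return the first successful response."""
    last_error: Optional[Exception] = None
    for call_model in models:
        try:
            return call_model(prompt)
        except Exception as exc:  # e.g. provider outage or rate limit
            last_error = exc
    raise RuntimeError("all models failed") from last_error


if __name__ == "__main__":
    def primary(prompt: str) -> str:
        raise ConnectionError("primary model is down")  # simulated outage

    def backup(prompt: str) -> str:
        return "backup answer for: " + prompt

    print(complete_with_fallback("Is this a support email?", [primary, backup]))
```

Ordering the list by preference means the backup is only paid for when the primary fails.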

After the filtering, we pass the message to the main Agent node, which composes the answer. Agent nodes can use tools, and here we had two options: use the existing knowledge base in the Pinecone vector store, or create a new one in the OpenAI vector store. There is no Pinecone integration in Agent Builder. The agent could reach Pinecone through a custom MCP server, but we would need to create and deploy that server ourselves, which is a path we chose not to follow because we wanted a no-code workflow. So we chose to upload the knowledge base to OpenAI’s vector store. As we already had an n8n automation that composes question-answer pairs and uploads them to the Pinecone vector store, we duplicated it and modified it slightly so that it uploads to the OpenAI vector store instead. Once our knowledge base was ready, it was very easy to connect it to the agent. OpenAI has made the internal tooling very easy to use; the only catch is that you’ll most likely be tied to their ecosystem. After finalizing the prompt, we had the agent ready.

For the next step, we used the Guardrails node, which is a preprogrammed node with Personally Identifiable Information (PII), Moderation, Jailbreak, and Hallucination options. We turned on the Hallucination toggle to check whether the agent’s generated answer contains AI hallucinations. This is a really powerful and unique node. If you want to achieve something like this in n8n, you’d need to create a custom agent for it, but in Agent Builder everything is ready for you to use.

As mentioned before, the agent node’s tools include MCP servers, and there are some existing ones that you can use. One of them is a Gmail MCP, but we couldn’t find a way to draft a message in Gmail with it. Agent Builder is quite new, so upcoming updates may add that. For the time being, we routed the final result to a simple output node, leaving the support team to create and review drafts in Gmail manually.

What this means in practice

- If you want a no-code or low-code pipeline that starts with Gmail and ends with a Gmail draft plus logs, n8n makes that trivial.

- If you want an agent that can retrieve context and write consistently with safety checks, Agent Builder makes that fast to iterate and easy to ship as a chat experience or widget.

Comparison that generalizes beyond our case

Integrations and reach

- n8n: Large catalog of ready nodes for Gmail, Google Workspace, Slack, CRMs, databases, Pinecone, webhooks, schedulers, custom code, and more. If a node is missing, the HTTP node or community nodes fill the gap. Good fit when you need many services in a single flow.

- Agent Builder: Native File Search and vector stores, safety guardrails, and an MCP-based path to third-party tools. It is improving quickly, but today it “forces” you to stay inside OpenAI’s ecosystem unless you set up MCP or write a tool.

Triggers and workflow shape

- n8n: Event-driven and background friendly. Webhooks, polling, schedules, queues, retries, rate limits, error branches, approvals, multi-branch logic, and full run history. This is the “plumbing” you need for production.

- Agent Builder: Chat-first entry point with a simple Start node, branching via conditions, and agent nodes that do the reasoning. Great for the middle of a flow. For background or event triggers, you will likely front it with your own code or an orchestrator.

Data and retrieval

- n8n: Bring any vector database. Pinecone works out of the box. You control data location and updates.

- Agent Builder: OpenAI vector store and File Search are built in and frictionless. If you want Pinecone, use MCP or a custom tool. Guardrails include a hallucinations check tied to your store.

Development and debugging experience

- n8n: Strong developer ergonomics for automation. Run individual nodes, pin or mock inputs, replay partial executions, inspect each step’s data and errors, search execution history, and add retries and dead letter paths. Great for diagnosing production issues and iterating safely.

- Agent Builder: Strong ergonomics for the agent brain. Quick preview runs, clean versioning, evals, and guardrails. Lighter on pipeline-grade debugging because it is focused on reasoning and retrieval rather than full-stack orchestration.

Models and flexibility

- n8n: Model agnostic. Use OpenAI, Anthropic, Google, Mistral, Groq, OpenRouter, and more. Easy to mix and match, and to set fallbacks.

- Agent Builder: Designed for OpenAI models. You can export agent logic to code and call other things, but the sweet spot is to use OpenAI end-to-end.

Iteration speed and quality

- n8n: Fast to build full pipelines. Retrieval plus prompting works well and is easy to tune. You own the pieces.

- Agent Builder: Very fast for agent behavior. Preview runs, versioning, evals, and guardrails help tighten quality and reduce drift. ChatKit and Widget Studio make the interface and embedding very simple.

Governance and safety

- n8n: You assemble safety with prompts, filters, policies, and your own data controls. Self-hosting is a plus for compliance.

- Agent Builder: Guardrails for PII, moderation, jailbreaks, and hallucinations are built in. Evals and trace grading help you measure and improve the agent.

Deployment and UI

- n8n: Ships actions back to your tools. If you need a front end, you bring one or pair it with a UI product.

- Agent Builder: Ships a chat/widget out of the box via ChatKit and Widget Studio. Good for internal assistants and embedded support. Less about pushing actions to external systems by itself.

Costs

In our tests, costs were similar because we used the same model and did comparable work. In general:

- n8n: You pay for the subscription (or self-host) plus whatever APIs you call. Self-hosting can optimize spending.

- Agent Builder: You pay standard OpenAI API usage. The platform pieces are included. If you rely heavily on vector storage or guardrails, budget for that usage.

Where our experience fits the bigger picture

- Our n8n workflow did the full Gmail pipeline with Pinecone retrieval and drafted answers. It felt like a finished operational system.

- Our Agent Builder workflow recreated the agent brain. Same model, same idea, plus the hallucinations guardrail. It was quick to tune and felt consistent. It did not cover Gmail triggers or drafts by itself.

- We did not try to glue them together. This was a clean comparison.

Choose n8n if:

- You want end-to-end no-code or low-code automation across real systems without writing connectors

- You need triggers, retries, logs, labels, and reporting

- You care about self-hosting, model choice, or data residency

- Your UI is secondary, and your goal is “do the task in my stack”

Choose Agent Builder if:

- You want a focused agent with built-in retrieval and safety

- You want to iterate quickly with preview, guardrails, and evals

- You plan to embed an agent in a site or product with ChatKit and widgets

- You are comfortable staying in the OpenAI ecosystem or investing in MCP connectors